Standing on the AWS re:Invent show floor last week, you could feel the market shifting. Not the slow, incremental kind of change the enterprise security world usually produces, but something faster and more fundamental.

The conversations, the booth demos, the executive discussions all circled back to the same question: if software agents are about to be everywhere, what’s securing them? What are the guardrails?

AWS positioned this year's re:Invent squarely around AI infrastructure and agentic systems. The keynotes hammered on enterprise AI adoption, new foundation models, and the compute layers needed to run them at scale. But woven through all of it was a second, quieter theme: the architecture that secured the last generation of software won't hold up for this one. When your environment includes not just humans and services but autonomous agents that plan, decide, and act across systems, your security model has to evolve from static perimeters to dynamic, contextual access. And that shift is happening now.

How we got here

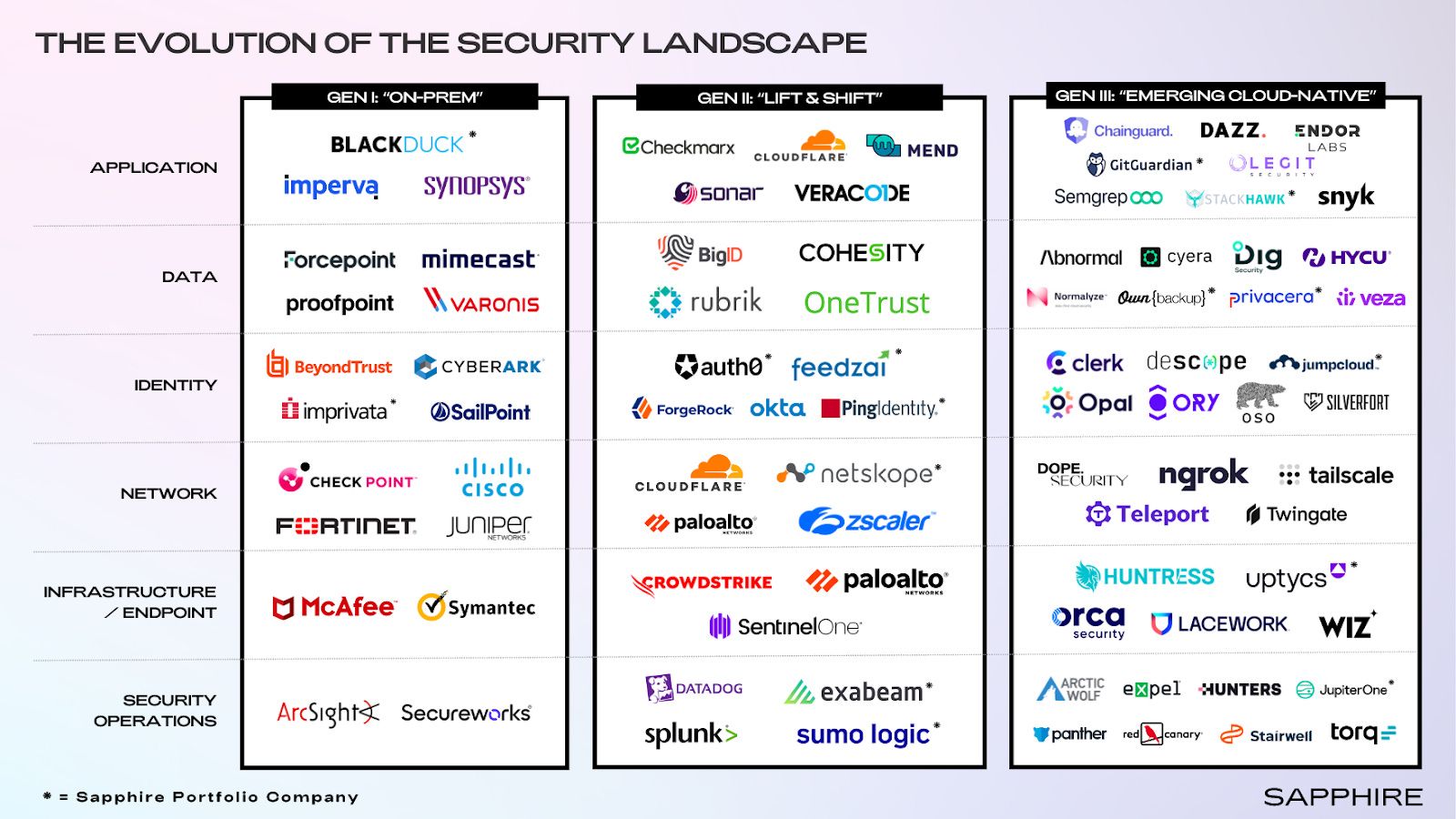

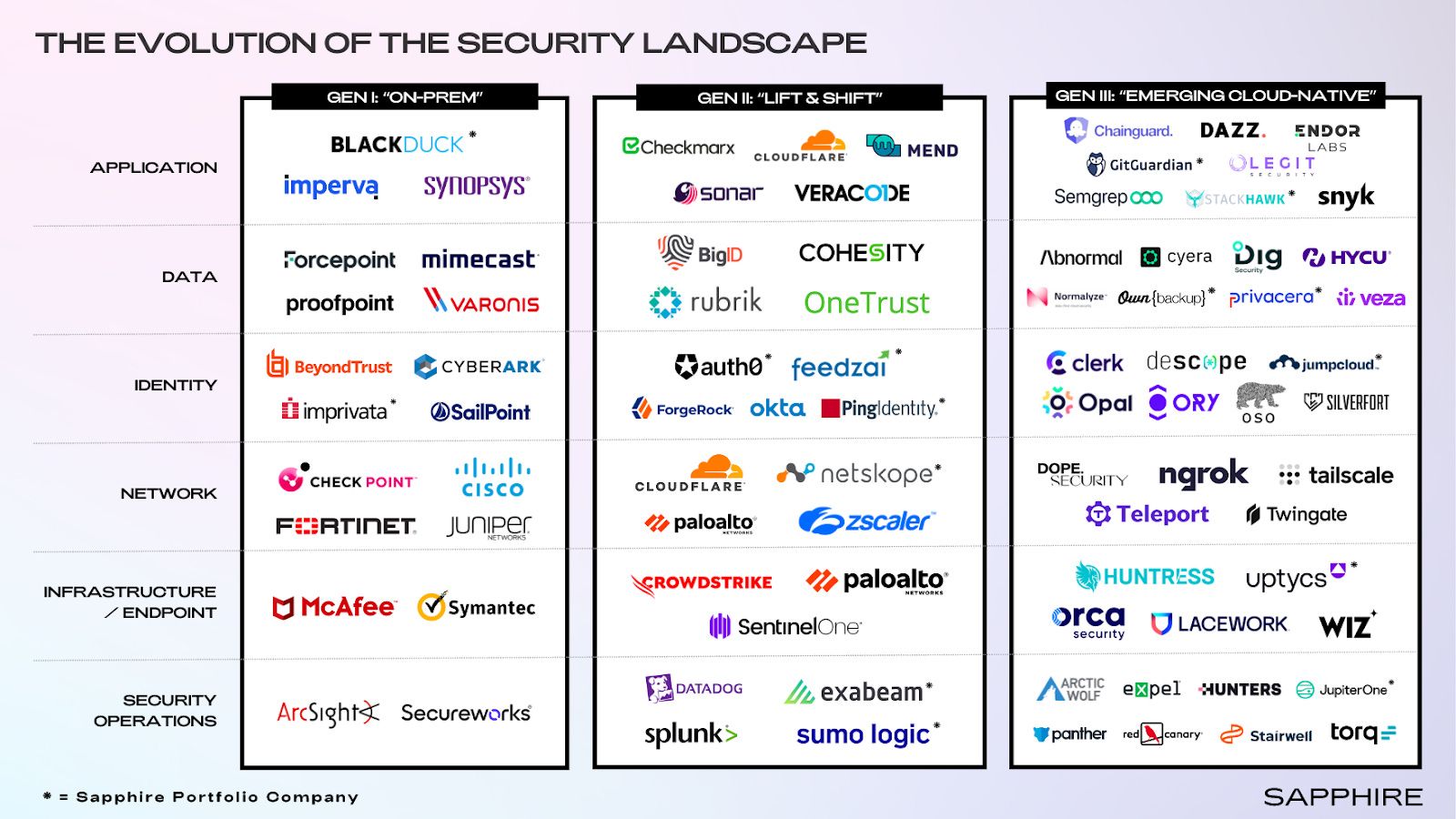

The security industry has moved through three distinct architectural generations, as Casber Wang of Sapphire Ventures outlined in his blog post "Securing the Future: The Next Wave of Cybersecurity." re:Invent felt like the moment Gen III stopped being theoretical and became operational reality.

Source: casber.substack.com


Gen I was perimeter-based security: firewalls, on-premises identity systems, and endpoint controls designed for a world where apps lived in datacenters and users worked inside corporate networks. That model made sense until the environment expanded beyond what those controls could handle. The datacenter didn't vanish, but suddenly it was just one piece of a much larger, distributed puzzle.

Gen II brought those same controls into the cloud: cloud WAFs, cloud IAM, cloud-native endpoint detection. It solved the scale problem but introduced a new one: tool sprawl. We ended up with overlapping policies, fragmented visibility, and security teams managing multiple dashboards instead of reducing actual risk. "Cloud-enabled" isn't the same as cloud-native, and most Gen II tools have exposed that distinction in production.

Gen III is different because the architecture is different. It treats identity, not the perimeter, as the control plane. Security isn't a wrapper anymore; it's embedded into APIs, developer workflows, and the runtime itself. Controls are continuous, not point-in-time. Automation drives remediation, not ticket queues. And the foundational assumption flips. Instead of trusting the network and verifying at the edge, you trust nothing and authenticate everything, from humans to microservices and now AI agents, based on identity and context.
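To make "trust nothing and authenticate everything" concrete, here is a minimal sketch of a deny-by-default authorization check that looks at who the principal is and the context of the request, never at network location. Everything in it (`Principal`, `Rule`, `RULES`, `authorize`, the context keys) is a hypothetical name chosen for illustration, not the API of any product mentioned in this post.

```python
from dataclasses import dataclass, field


@dataclass
class Principal:
    name: str
    kind: str            # "human", "service", or "agent"
    authenticated: bool  # result of a prior authentication step (assumed)


@dataclass
class Rule:
    kind: str
    action: str
    resource_prefix: str
    required_context: dict = field(default_factory=dict)


# Hypothetical policy set: each rule grants one narrow capability.
RULES = [
    Rule("human", "read", "reports/", {"mfa": True}),
    Rule("agent", "read", "reports/", {"environment": "production", "delegated": True}),
]


def authorize(principal: Principal, action: str, resource: str, context: dict) -> bool:
    """Deny by default; allow only if an explicit rule matches identity and context."""
    if not principal.authenticated:
        return False
    for rule in RULES:
        if (
            rule.kind == principal.kind
            and rule.action == action
            and resource.startswith(rule.resource_prefix)
            and all(context.get(k) == v for k, v in rule.required_context.items())
        ):
            return True
    return False
```

The same function handles humans, services, and agents; the only thing that changes is the rule that applies. An agent with no delegation in its context is denied even for a resource it could otherwise read.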

That's the model AWS was describing in its security sessions this year, and it's the model reflected on the show floor. The vendors getting traction aren't selling better versions of legacy applications. They're selling a fundamentally different approach to how security fits into modern systems.

The backdrop: Infrastructure, network, data, identity

AI workloads are clarifying what Gen III needs to look like because they stress-test every layer of the stack. Start with compute: AI systems are elastic, distributed, and hardware-accelerated by default. The partnership announcements around GPU orchestration platforms like NVIDIA's Run:ai showed how training and inference workloads are becoming programmable, policy-aware, and dynamically scheduled across hybrid environments. The business case emphasizes higher utilization, faster iteration, and lower cost per model. The security implication is less obvious but more important: when your compute layer is that fluid, static perimeter controls become meaningless. You need security that moves at the same speed as the workloads, expressed as code and enforced through APIs.

The data layer is facing similar pressure. Agentic systems don't just read data; they generate massive transaction volumes as agents query, write, coordinate, and make decisions across distributed environments. Traditional databases weren't built for that pattern. CockroachDB showed up in conversations at re:Invent precisely because it was architected for this future: a distributed SQL database designed for global scale, strongly consistent transactions, and resilience without manual sharding or complex replication topologies. When you have thousands of agents acting concurrently across regions, you need a data layer that can handle the scale and distribution without becoming a bottleneck or a single point of failure. The database has to be as elastic and distributed as the compute and network layers around it.

The network layer is no simpler. AI teams run multi-cloud by default. GPUs get deployed wherever they're available or affordable, with the rest of the infrastructure typically living elsewhere. That geographic and vendor distribution breaks traditional network security, which assumes a clear boundary between "inside" and "outside." Tools like Tailscale are showing up in AI infrastructure because they simplify secure connectivity across distributed environments. They let teams connect compute, data, and services without the friction of traditional VPNs. But connectivity isn't the same as identity. When your compute nodes are ephemeral and your inference fleets scale dynamically with demand, you need more than just secure tunnels. You need every connection anchored to a verifiable identity with scoped permissions and audit trails, which is where the network layer ends and the identity layer begins.

All three layers (compute, data, and network) are converging on the same requirement: distributed, ephemeral infrastructure that can't rely on location or static configuration as the trust anchor. That shift was already happening before AI, but agentic systems make it unavoidable because they introduce a fourth problem that legacy security was never designed to handle: non-human actors with real decision-making authority. And that's where identity becomes non-negotiable, not as a feature of the stack, but as the control plane that authenticates every actor and authorizes every action.

The missing layer: AI agent identity

This is where Ory enters the picture, and why our presence at re:Invent matters. The Gen III shift requires identity systems built for more than humans. When AWS talks about agentic AI, which it positioned as a strategic direction throughout the event, it's describing systems that autonomously plan, select tools, and execute actions. Those agents aren't passive scripts. They're active principals operating with privileges, and they need the same identity primitives we expect for people: authentication, authorization, scoped permissions, lifecycle management, auditability, and revocation.

The problem is that most enterprise IAM stacks were built for employees logging into SaaS apps. They weren't built for ephemeral software agents that act continuously, across cloud boundaries, without a human in the loop. Bolting agent identity onto those systems doesn't work. You end up with long-lived credentials that violate least-privilege access, with account sprawl, or worse, with agents granted broad access because there's no better way to secure them.

What's needed is identity infrastructure purpose-built for this moment: a system that can issue credentials to agents; enforce policies based on agent intent and runtime context, not just static roles; understand that when an agent acts on behalf of a user, its permissions reflect that relationship; and provide full traceability so every action maps back to both the agent and the human who authorized it. That's not a feature addition to legacy IAM. It's a different category of security, and it's the missing piece that determines whether agentic AI becomes a force multiplier or a liability.
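As a rough illustration of those four properties, the sketch below issues a short-lived, scoped credential to an agent acting on behalf of a user, checks it at request time, and records every decision against both identities. It uses only the Python standard library; the token format, the `act_for` claim, the signing key, and the function names are assumptions made for this example and do not describe how Ory or any other product implements agent identity.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-signing-key"  # hypothetical key; a real system would manage and rotate keys
AUDIT_LOG = []                # in-memory stand-in for an audit trail


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_credential(agent_id, on_behalf_of, scopes, ttl_seconds=300, now=None):
    """Issue a short-lived token that binds the agent to a user and a set of scopes."""
    now = time.time() if now is None else now
    claims = {
        "sub": agent_id,
        "act_for": on_behalf_of,
        "scopes": sorted(scopes),
        "exp": now + ttl_seconds,
    }
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    return payload + "." + _sign(payload)


def check(token, scope, now=None):
    """Verify signature, expiry, and scope; log the decision for agent and user."""
    now = time.time() if now is None else now
    payload, _, signature = token.rpartition(".")
    if not hmac.compare_digest(signature, _sign(payload)):
        return False  # tampered or malformed token: nothing trustworthy to log
    claims = json.loads(base64.urlsafe_b64decode(payload))
    allowed = now < claims["exp"] and scope in claims["scopes"]
    AUDIT_LOG.append(
        {"agent": claims["sub"], "user": claims["act_for"], "scope": scope, "allowed": allowed}
    )
    return allowed
```

Calling `issue_credential("agent-7", "alice", ["invoices:read"])` and then `check(token, "invoices:read")` succeeds only until the token expires. A request for `"invoices:write"` is refused, and both outcomes land in `AUDIT_LOG` tagged with the agent and the user it acted for.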

Ory's direction has always been that identity must be modern, and modern means flexibility and scale. It's not just users anymore. It's services and autonomous agents, and they all need first-class identity with the same rigor you'd apply to your engineering team. If you can trust an agent's identity, you can let it safely make transactions, analyze business data for insights, optimize infrastructure, or accelerate deployments without waiting for human approval. That's how AI translates into real ROI instead of a compliance headache. If you can't trust it, every new agent becomes a risk.

Building the new internet

One of the Tailscale founders described their work as building a "new internet," which entails fixing foundational problems without breaking what already exists. That framing resonated at re:Invent because it captures what the market is attempting. We're not just swapping vendors. We're redesigning the architecture beneath modern systems, and identity is the load-bearing layer.

Identity isn't a login page. It's the perimeter. It defines who can connect to what. It contributes to the audit trail that makes compliance possible. And increasingly, it's the trust boundary that allows autonomous software to operate securely at scale. The teams that understand that, and build for it, are the ones who'll define what enterprise software looks like over the next decade.

Ory is building the identity fabric for that future: scalable, distributed identity and access management (IAM) designed for humans and agents, where every actor is authenticated, every permission is scoped, and every action is traceable. That's how you turn the promise of agentic AI into operational reality without inheriting unacceptable risk. We're not just adopting the next wave of security. We're helping build it.

Is your enterprise building towards modern, agentic use cases? Contact us to discuss how Ory can help secure your AI agents.
